Image2Text AI App with Anthropic

Guide overview #

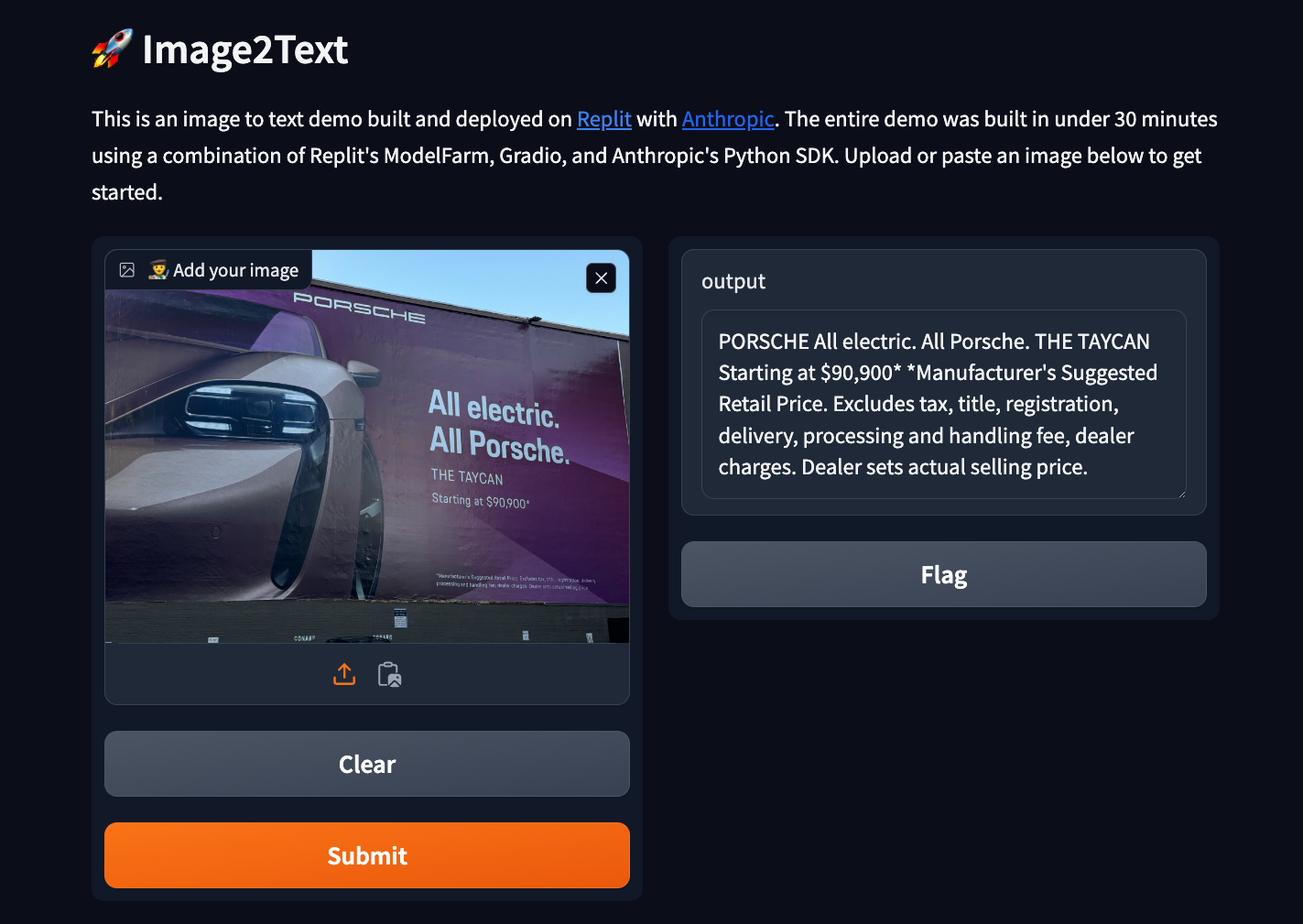

This guide provides a step-by-step walkthrough to build an Image2Text AI app. The app lets a user upload an image, and Anthropic's Claude model extracts the text from it. Your final deployed app will look like this:

Getting started #

Start by forking this template. Click "Use template" and label your project.

Add Anthropic API key to Secrets #

First, we need to create an Anthropic API key. Go to the Anthropic dashboard and create one. API keys are sensitive information, so we want to store them securely.

In the bottom-left corner of the Replit workspace, there is a section called "Tools". Select "Secrets" within the Tools pane, and add your Anthropic API key to the Secret labeled "ANTHROPIC_API_KEY".
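Replit exposes Secrets to your code as environment variables, so the key never has to appear in main.py. Here is a minimal sketch of reading it, assuming the Secret is named ANTHROPIC_API_KEY as above. The helper name get_api_key is hypothetical and not part of the template:

```python
import os


def get_api_key(name: str = "ANTHROPIC_API_KEY") -> str:
    """Return the API key stored in the environment variable `name`.

    get_api_key is a hypothetical helper used for illustration only.
    """
    key = os.environ.get(name, "").strip()
    if not key:
        # Fail early with a readable message instead of an API error later.
        raise RuntimeError(f"{name} is not set; add it in the Secrets pane.")
    return key
```

The returned value can then be handed to the client, for example anthropic.Anthropic(api_key=get_api_key()).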

Import necessary modules and set up the Anthropic client #

Begin by importing the necessary modules and setting up the Anthropic client with your API key. Paste the following code into your main.py file:

Here's a quick overview of the packages we are using:

- base64 for encoding images

- os for accessing environment variables

- anthropic for interacting with the Anthropic API

- gradio for creating the web interface

- ratelimit for handling rate limiting

Building the application #

We will now create utility functions. Utility functions are small, pre-defined helpers that the rest of the app calls; here they prepare the image for the Large Language Model (LLM). We will create two:

- image_to_base64 for converting images to base64 encoding

- get_media_type for determining the media type of the uploaded image based on its file extension

Add this code to the bottom of main.py:

These functions prepare the image data before it is sent to the Anthropic API.
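A minimal sketch of the two helpers, using only the standard library. The extension table is limited to the image formats the Anthropic API accepts (JPEG, PNG, GIF, WebP). Raising ValueError for anything else is an assumption of this sketch, not necessarily what the template does:

```python
import base64
import os

# Extension-to-media-type table for the formats the Anthropic API accepts.
MEDIA_TYPES = {
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".png": "image/png",
    ".gif": "image/gif",
    ".webp": "image/webp",
}


def image_to_base64(image_path: str) -> str:
    """Read an image file and return its contents as a base64 string."""
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


def get_media_type(image_path: str) -> str:
    """Map the file extension to a media type; reject unsupported ones."""
    extension = os.path.splitext(image_path)[1].lower()
    try:
        return MEDIA_TYPES[extension]
    except KeyError:
        # Assumption: fail loudly rather than guess a media type.
        raise ValueError(f"Unsupported image type: {extension or 'none'}") from None
```

The extension is lowercased before the lookup, so photo.JPG and photo.jpg are treated the same way.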

Implement the image-to-text functionality with rate limiting #

We now want to create a function that converts the image to text. We also want to add rate limiting. Expensive queries like this can be abused, which leads to large overages. Rate limiting makes sure further requests are denied once the service is being overused.

The image_to_text function takes the base64-encoded image and media type as input, sends it to the Anthropic API along with a prompt, and extracts the text from the response.
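The request described above can be sketched as follows. To keep the example free of third-party imports, the client and model name are passed in as parameters, which is an assumption of this sketch (the guide's function takes only the image and media type). The prompt wording and the max_tokens value are also illustrative, not taken from the template:

```python
from typing import Any

# Assumption: illustrative prompt, not the template's wording.
PROMPT = "Extract all text from this image. Return only the extracted text."


def image_to_text(client: Any, model: str, base64_image: str, media_type: str) -> str:
    """Send one image plus a prompt to the Messages API and return the reply text."""
    message = client.messages.create(
        model=model,
        max_tokens=1024,  # assumption: upper bound on the length of the reply
        messages=[
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": base64_image,
                        },
                    },
                    {"type": "text", "text": PROMPT},
                ],
            }
        ],
    )
    # The response content is a list of blocks; the first block holds the text.
    return message.content[0].text
```

In main.py, client would be an anthropic.Anthropic instance created with your API key, and model the name of a vision-capable Claude model.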

Add this code to the bottom of main.py:

The @limits decorator caps this function at 5 calls every 30 minutes (1800 seconds), which helps avoid exceeding the API rate limits.
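To make the 5-calls-per-1800-seconds behavior concrete, here is a standard-library stand-in for such a decorator. It is not the ratelimit package's code. The names simple_limits, TooManyCalls, and extract are hypothetical, and the sketch is not thread-safe:

```python
import time
from functools import wraps


class TooManyCalls(Exception):
    """Raised when the call budget for the current window is used up."""


def simple_limits(calls: int, period: float, clock=time.monotonic):
    """Allow at most `calls` invocations per `period` seconds (fixed window)."""

    def decorator(func):
        window_start = clock()
        used = 0

        @wraps(func)
        def wrapper(*args, **kwargs):
            nonlocal window_start, used
            now = clock()
            if now - window_start >= period:
                # The window has elapsed: start a new one with a fresh budget.
                window_start, used = now, 0
            if used >= calls:
                raise TooManyCalls(f"limit of {calls} calls per {period}s reached")
            used += 1
            return func(*args, **kwargs)

        return wrapper

    return decorator


@simple_limits(calls=5, period=1800)
def extract(image_name: str) -> str:
    # Stand-in for image_to_text; no API call is made in this sketch.
    return f"text from {image_name}"
```

The counter lives on the decorated function, so the budget is shared by every caller of that function rather than tracked per user.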

Create the Gradio web interface #

Create the Gradio web interface using the gr.Blocks and gr.Interface components. Define the app function that handles the image upload, calls the image_to_text function, and returns the extracted text.

Add this code to the bottom of main.py:

The gr.Blocks component creates the overall structure of the web interface, and gr.Interface defines the input (image upload) and output (extracted text) components. Calling demo.launch() starts the web interface.
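The callback that sits between the upload and the extracted text can be sketched without Gradio itself. The factory make_app, the user-facing messages, and the catch-all error handling are assumptions of this sketch. It expects the image to arrive as a file path and takes the text-extraction function as a parameter so it can be tried in isolation:

```python
import base64
import os
from typing import Callable, Optional

MEDIA_TYPES = {
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".png": "image/png",
    ".gif": "image/gif",
    ".webp": "image/webp",
}


def make_app(image_to_text: Callable[[str, str], str]) -> Callable[[Optional[str]], str]:
    """Build the upload handler around a (base64_image, media_type) -> text function."""

    def app(image_path: Optional[str]) -> str:
        if not image_path:
            # Nothing was uploaded before the user submitted the form.
            return "Please upload an image."
        extension = os.path.splitext(image_path)[1].lower()
        media_type = MEDIA_TYPES.get(extension)
        if media_type is None:
            return "Unsupported image type."
        with open(image_path, "rb") as image_file:
            base64_image = base64.b64encode(image_file.read()).decode("utf-8")
        try:
            return image_to_text(base64_image, media_type)
        except Exception as error:
            # Show rate-limit or API failures as a message instead of crashing the UI.
            return f"Could not extract text: {error}"

    return app
```

To wire it in as described above, the returned app function would be passed as fn to gr.Interface inside a gr.Blocks context, with an image input configured to hand over a file path (for example gr.Image(type="filepath")), followed by demo.launch().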

Deploy your project #

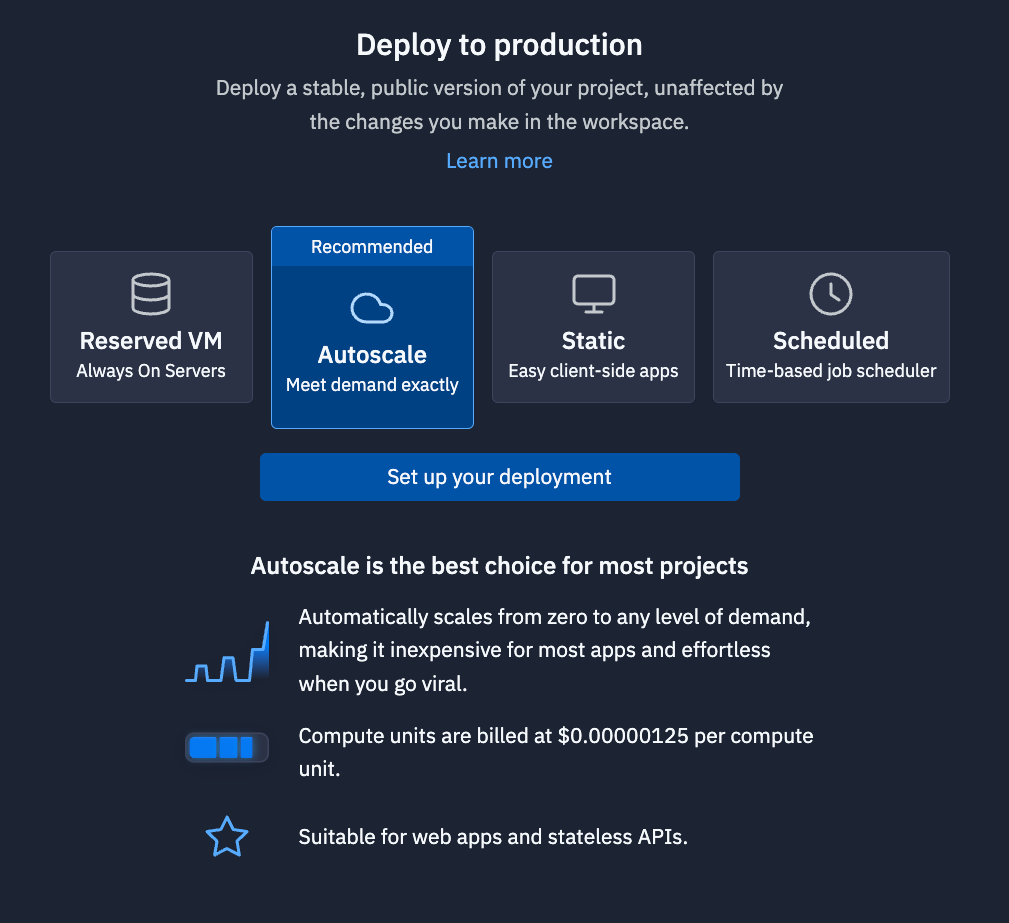

Your Webview uses a replit.dev URL. The development URL is good for rapid iteration, but it will stop working shortly after you close Replit. To have a permanent URL that you can share, you need to deploy your project.

Click "Deploy" in the top-right of the Replit Workspace, and you will see the Deployment pane open. For more information on our Deployment types, check our documentation.

Once your application is deployed, you will receive a replit.app domain that you can share.

What next #

Check out other guides on our guides page. Share your deployed URL with us on social media, so we can amplify it.